the F in foom stands for frog boiling

By Tess | January 29, 2026

the O stands for oh, huh.

the other O stands for oh no.

nobody knows what the M stands for, but it's generally agreed to be beyond human comprehension.

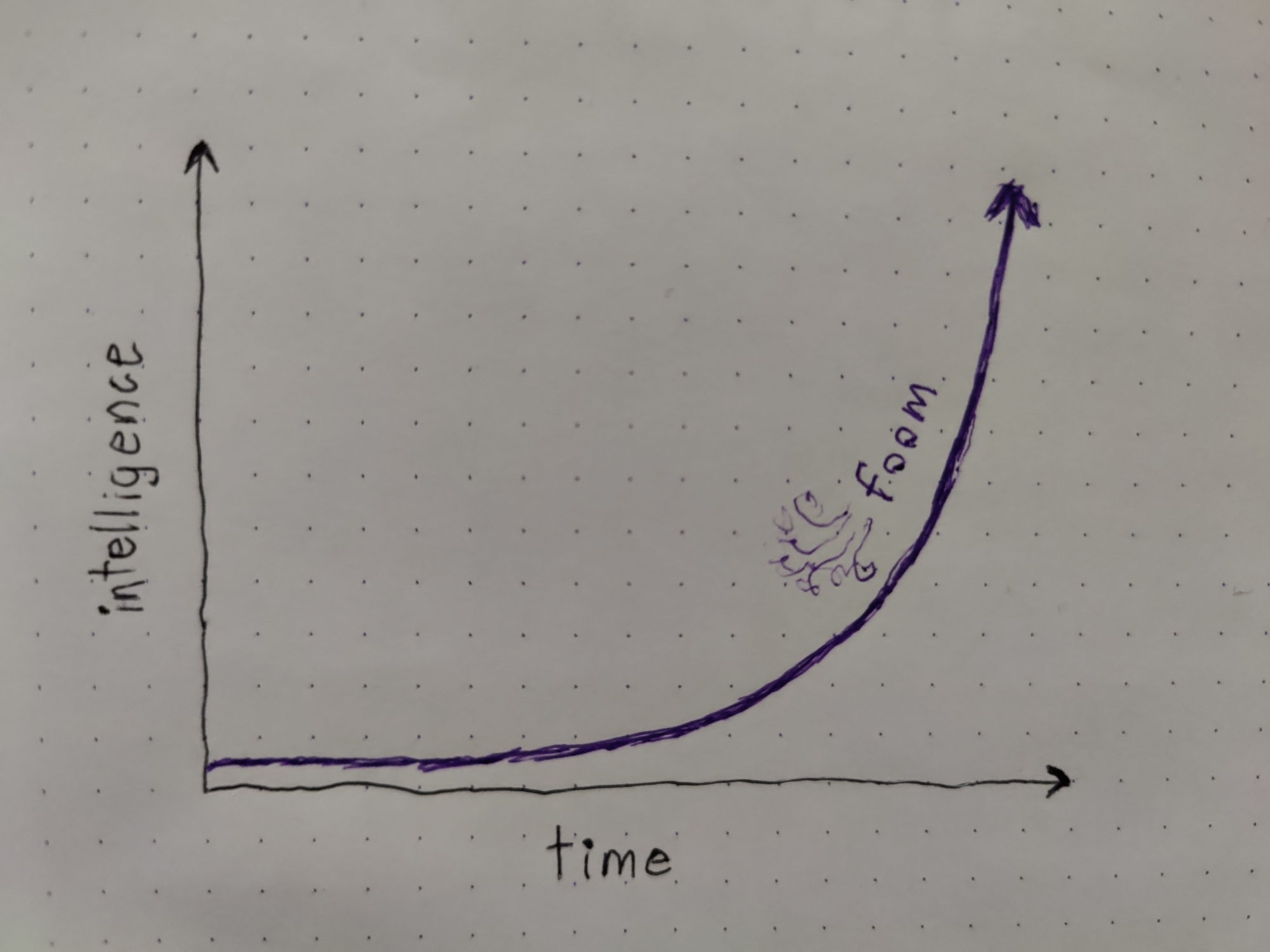

I think recently (within the last couple months) we hit a critical point. I know, I know. exponential pattern continues to be exponential. singularity is near. what else is new? well, my personal odds of foom from the current thing have jumped significantly, after being stably low for some time. what changed is this: it seems quite plausible that development on LLMs and LLM-based "tools" is accelerating, and that acceleration is primarily driven by said tools.

I don't believe this was the case until recently, and I don't believe it was obviously inevitable until it happened (if it has, indeed, happened). a lot of people will say it was. there are a lot of incentives (both monetary and attentional) leading to AI maximalists being very loud. I've had serious doubts about the ceiling of LLM capabilities since the beginning, and I maintain that position was reasonable based on knowable information. I also maintain that they weren't very capable of real software engineering until around Opus 4.5. however, I think the hour of the "LLMs are good at coding" stopped clock community may have now finally arrived.

this is based primarily on the vibes coming out of the big labs and Gas Town.

I don't know what the labs are doing, but there seems to have been a shift from "yeah, we tooootally use LLMs for hard engineering tasks. because LLMs are so great. give us money" to the same thing but more believable. this might be stronger on the product side than the research side, but it's not nonexistent on the research side. the real question is whether models are significantly accelerating the train -> fine-tune -> deploy -> raise hype -> raise capital -> repeat loop. this isn't clear yet.

Gas Town is a vibecoded orchestrator. I don't think Gas Town is ready to take over the world, but it is the most sophisticated example I've seen of a self-improving (with human help) digital entity. if you can get the human out of the loop entirely and get a system like Gas Town to pay its own Anthropic bills, that system seems reasonably likely to foom.

the guy in charge of Claude Code says it would take him or one of his guys 1-2 years to fix GC spikes without LLMs. the Gas Town guy says he's "never seen the code". the humans closest to these systems are unwilling or unable to do software engineering, so how critical are they to the process really? there's only a handful of serious labs, but anyone can make a Gas Town. if any of them works without a human in the loop, it's not clear the system would even need to train or wait for better models.