pictures with cats in dating app profiles

By Tess

August 07, 2024

how published research can lead us astray

a hypothetical

suppose you're a straight man, and you're single (for some of you, this may not take a great leap of imagination). also suppose you have a cat named Micromort.

you'd like to be less single (or at least less celibate), so you install the hot new dating app: Bink-2-Boink. you write a quick bio and add some pictures, but on the last slot you pause. you've got a good picture of yourself snuggled up with little Mort, but is that the right choice? women tend to like animals, right? but what does having a cat signal about you? some people might make a snap decision based on their gut, but not you. it's a brutal dating market out there, and you need all the alpha you can get. you decide to consult the literature.

you're doubtful you'll find anything interesting, but luck is on your side! you quickly discover a study with the exact data you're looking for [1], and it has some surprising conclusions:

Men holding cats were viewed as less masculine; more neurotic, agreeable, and open; and less dateable

you choose a different picture, glad you put in the effort to overcome your faulty assumptions.

[1] Kogan L, Volsche S. Not the Cat's Meow? The Impact of Posing with Cats on Female Perceptions of Male Dateability. Animals (Basel). 2020 Jun 9;10(6):1007. doi: 10.3390/ani10061007. PMID: 32526856; PMCID: PMC7341239.

analysis

let's get something out of the way: "Not the Cat's Meow?" is a bad study. your character made a mistake by paying attention to it. CNN may not have noticed, but surely the real you would have, right? by choosing this example I'm certainly not steelmanning science, but there is something to be learned here besides the fact that junk science exists.

the interesting bit is where the study went wrong. of all the little problems (small sample size, questionable methods, etc.), one stands out.

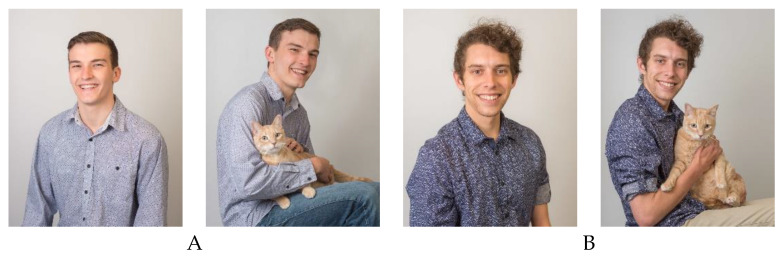

these were the pictures used in the survey. these are not good pictures.

of course women think these men with cats are neurotic, because who takes professional-looking pictures in a studio while clinging to a cat that obviously doesn't want to be there??

scientists, reviewers and individuals like you can systematically identify certain patterns of scientific malpractice, such as failing to control for an important variable. but this isn't a loose variable, it's a set of constants locked in the wrong place. they may have correctly determined a correlation between cat pictures and dateability within a specific region of the dating profile space, but the space is large and high-dimensional, and the region they've chosen to study probably doesn't generalize.
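to make the "constants locked in the wrong place" idea concrete, here's a toy sketch. everything in it is an assumption made up for illustration: the `dateability` function, the three settings, and every number are hypothetical, and none of it comes from the study. it only shows how an effect measured with the setting pinned to "studio" can have the opposite sign from the effect averaged over a wider slice of the space.

```python
# Toy model only: all scores and settings below are invented for illustration.
# Nothing here is data from Kogan & Volsche.

# Assumed effect of adding a cat, depending on where the photo was taken.
CAT_EFFECT_BY_SETTING = {
    "studio": -1.0,    # stiff pose, unhappy cat
    "home": 1.0,       # relaxed, cat is comfortable
    "outdoors": 0.5,
}


def dateability(has_cat: bool, setting: str) -> float:
    """Hypothetical dateability score for a profile picture."""
    score = 5.0  # arbitrary baseline
    if has_cat:
        score += CAT_EFFECT_BY_SETTING[setting]
    return score


def cat_effect(settings: list[str]) -> float:
    """Average change in score from adding a cat, over the given settings."""
    diffs = [dateability(True, s) - dateability(False, s) for s in settings]
    return sum(diffs) / len(diffs)


# The "study": setting is a constant, locked to the studio.
studio_only = cat_effect(["studio"])

# The wider space the reader actually cares about.
all_settings = cat_effect(["studio", "home", "outdoors"])

print(f"cat effect, studio only:  {studio_only:+.2f}")
print(f"cat effect, all settings: {all_settings:+.2f}")
```

in this made-up world the studio-only measurement is perfectly accurate, and it still points the wrong way for anyone whose cat picture was taken on the couch.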

importantly, the authors didn't choose this region due to bad luck or bad intuition. they chose it in order to make the more legible aspects of their study higher quality. the decision to take pictures in a studio seems to have been driven by a desire to minimize uncontrolled variables. in cases like this, the systems we've adopted to raise the quality of science simply optimize for problems that are harder to spot.

a typical human is pretty good at determining if a portrait has fucked up vibes. Lori and Shelly may have missed the problem, but this time you can probably see it. but what happens when a similar mistake is made in a field you're not a subject matter expert in? what happens when nobody is capable of simply intuiting that the assumptions of an experiment don't generalize?

the scary thing is, nothing. the study gets published and people believe wrong things. this happens constantly. my impression is basically all of psychology is this sort of thing, and it's pervasive in other fields as well.

conclusion

published studies are a useful tool. they're also useful for bashing your finger with. don't repeatedly bash your finger.

the decision-making loop of a practical rational agent does include incorporating published research, but it's essential to be discerning about which research to incorporate and to contextualize it properly.

critically, it's not always optimal to evaluate a study using only the criteria sanctioned by science. if your goal is truly making optimal decisions, you've got to build up your own systems for deciding when to pay attention to research, and when to disregard it.