foom on carbon

By Tess

February 18, 2024

why let matrices have all the fun?

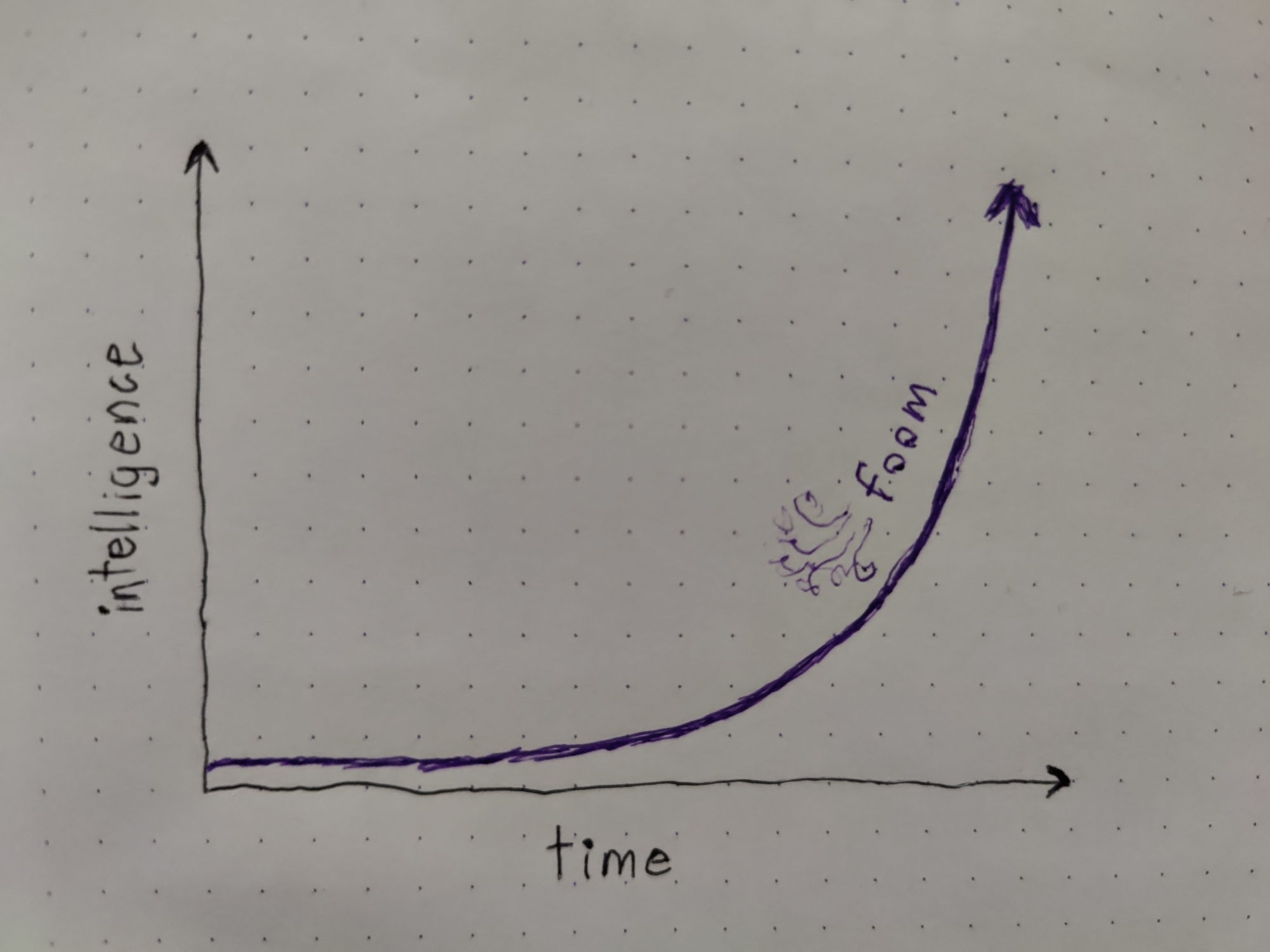

foom

aka fast takeoff. the theory goes something like this:

humans are smart. humans make AIs. as of early 2024 the AIs are less smart than the smartest humans at most general tasks, but at some point they will surpass us. the AIs will then build smarter AIs than the humans could. those AIs will rapidly develop even smarter AIs.

assuming infinite intelligence is possible and the intelligence/power conversion rate doesn't asymptote, we now have AI gods.

this chain of reasoning is one pillar of a specific sect of AI doomers. however, AI doomers and AI doom are not the subject of this blogpost.

carbon

ie the basis of biological life on earth (in contrast to AIs, which run on silicon chips). carbon-based humans, if you recall, are smarter than the smartest AIs in many ways (again, as of early 2024). if you find the above logic compelling, then why does the first step need to involve jumping substrates? why do we need the silicon?

biological vs cognitive

if we're going to use our existing abilities to recursively enhance those same abilities, we have two potential approaches. we can make physical changes/enhancements to our biology, or we can iterate on our cognitive processes. drugs can be effective and I'm all for brain implants (as long as I have root on them, obviously), but iteration loops are slow. perhaps the biological approach will seem more attractive once we're running up against the limits of stock brains, but I think there's a lot to be done in cognitive infrastructure first.

so what does this actually look like?

understanding yourself and your patterns at multiple levels of abstraction. recognizing what works and what doesn't, and iterating in a productive direction. this twitter thread is roughly my template (I'll write this up into another post here at some point).

what distinguishes foom-targeting iteration from general personal growth techniques is an emphasis on recursive self-improvement. you want to be doing hard things that make you better at doing hard things, in the abstract. which is itself, obviously, a hard thing. anything non-exponential and not in service of exponential gains is considered inconsequential. no matter how big the constant or multiple is, it will be rendered negligible in the long run.
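to make the "constants get rendered negligible" claim concrete, here is a minimal sketch in python. the function names and the numbers (a flat gain of 100 per step vs a 1% compounding gain) are made up for illustration and are not measurements of anything. it just finds the step at which a small compounding gain overtakes a large fixed gain.

```python
def linear_growth(start: float, gain_per_step: float, steps: int) -> float:
    """Ability after `steps` steps when each step adds a fixed amount."""
    return start + gain_per_step * steps


def compounding_growth(start: float, rate: float, steps: int) -> float:
    """Ability after `steps` steps when each step multiplies ability by (1 + rate)."""
    return start * (1 + rate) ** steps


def crossover_step(start: float, gain_per_step: float, rate: float,
                   max_steps: int = 10_000):
    """First step at which compounding beats linear, or None if not found in range."""
    for step in range(1, max_steps + 1):
        if compounding_growth(start, rate, step) > linear_growth(start, gain_per_step, step):
            return step
    return None


if __name__ == "__main__":
    # hypothetical numbers: a huge constant gain vs a tiny compounding rate
    step = crossover_step(start=1.0, gain_per_step=100.0, rate=0.01)
    print("compounding overtakes linear at step:", step)
```

the linear strategy looks far better for a long time, which is exactly why it is tempting. the compounding one only wins if you keep going long enough to reach the crossover.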

is foom on carbon possible?

probably in some sense, and in some sense not. I highly doubt full doubling-intelligence-every-X-seconds-forever level foom is possible on unaltered carbon (or any substrate for that matter). even if I observe evidence of god-level foom I will have to disbelieve it, since schizophrenia would be a more likely explanation (yay bayesian reasoning 😠).
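the bayesian point above can be sketched in a few lines of python. every number here is a hypothetical placeholder (I am not claiming real base rates for anything), and the hypothesis names are just labels. the only thing the sketch shows is the shape of the argument: when the prior for "real god-level foom" is far smaller than the prior for "my perception is broken", even evidence that fits foom perfectly leaves the boring explanation on top.

```python
def posterior(priors: dict, likelihoods: dict) -> dict:
    """Bayes' rule over a set of mutually exclusive hypotheses.

    priors[h]      = P(h) before seeing the evidence
    likelihoods[h] = P(evidence | h)
    """
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: value / total for h, value in unnormalized.items()}


# hypothetical priors, chosen only to illustrate the ratio argument
priors = {
    "foom": 1e-9,       # I really am fooming at god level
    "delusion": 1e-3,   # my perception of reality is unreliable
    "neither": 1 - 1e-9 - 1e-3,
}

# hypothetical likelihoods of "I observe what looks like god-level foom"
likelihoods = {
    "foom": 1.0,        # the evidence fits perfectly
    "delusion": 0.1,    # only some delusions would look like this
    "neither": 0.0,     # assume it cannot be observed otherwise
}

if __name__ == "__main__":
    for hypothesis, probability in posterior(priors, likelihoods).items():
        print(hypothesis, probability)
```

with these made-up inputs the ratio of priors does all the work. you would need evidence that is vastly more likely under foom than under delusion to flip the conclusion.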

that being said, some amount of using ideas you've figured out to figure out more useful ideas is certainly possible. the question is how far can we push it, and does focusing on that outperform other strategies?

one way to find out.